- By Promotica

- April 30, 2025

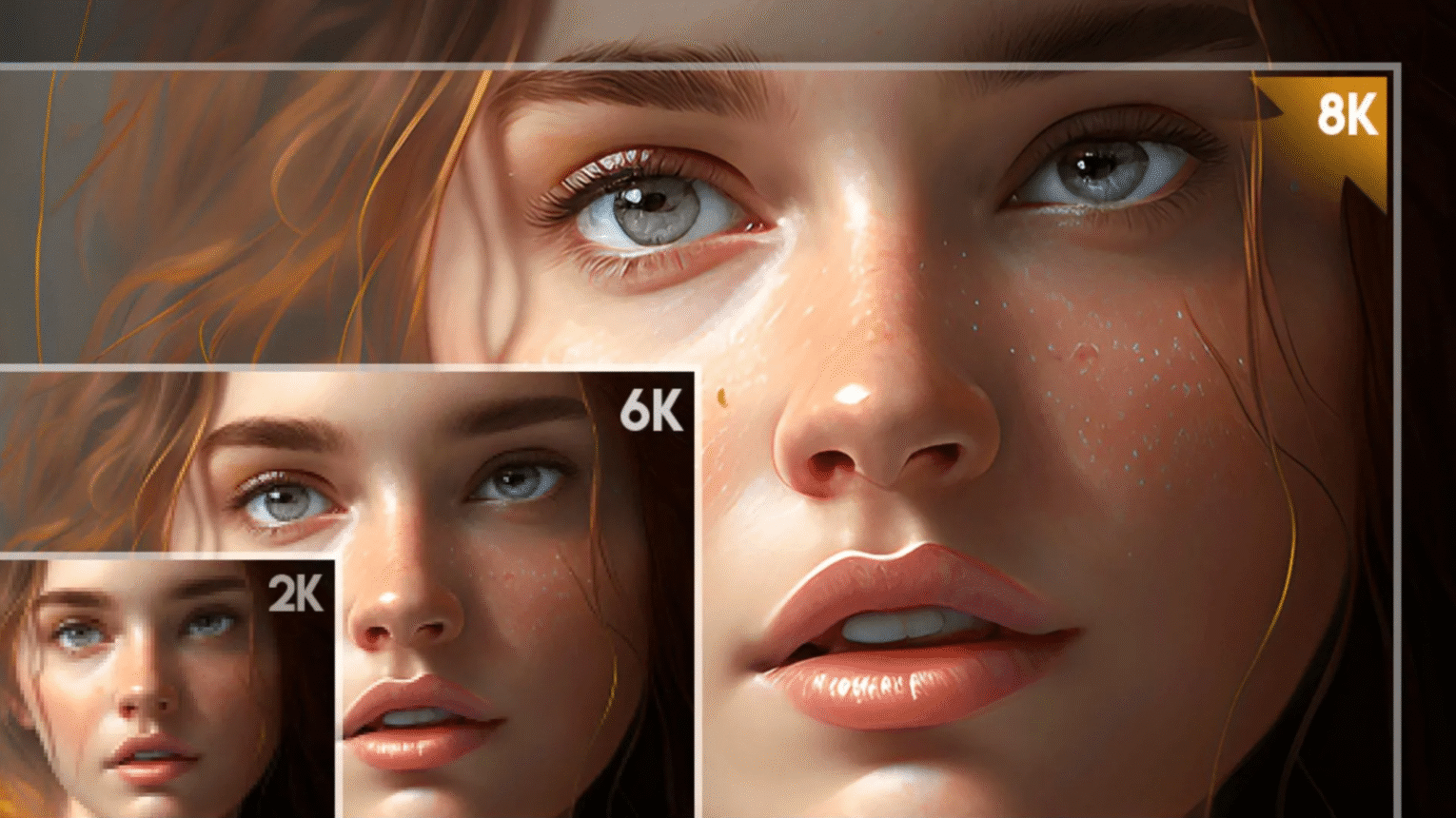

Upscaling an image without quality loss is hard because you are asking software to invent detail that the low-resolution file does not contain. Here is a technical breakdown of how modern AI-based upscaling works:

🔍 Traditional vs. AI-Based Upscaling

🟠 1. Traditional Upscaling (e.g., Bicubic, Bilinear)

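Stretches the pixel grid and fills each new pixel with a weighted average of its nearest original neighbors.

Doesn’t add new detail; it only blurs or sharpens what’s already there.

Result: often soft or blurry, with jagged edges at large scale factors.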
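To make the "weighted average" idea concrete, here is a minimal pure-Python sketch of bilinear upscaling on a tiny grayscale image. The function name `bilinear_upscale` and the list-of-rows image format are assumptions made for illustration; real image libraries implement the same idea far more efficiently.

```python
def bilinear_upscale(img, scale):
    """Upscale a 2D grayscale image (list of rows) by an integer factor."""
    h, w = len(img), len(img[0])
    out = []
    for y in range(h * scale):
        # Map the output pixel centre back into source coordinates, clamped to the image.
        sy = min(max((y + 0.5) / scale - 0.5, 0.0), h - 1)
        y0 = int(sy)
        y1 = min(y0 + 1, h - 1)
        fy = sy - y0
        row = []
        for x in range(w * scale):
            sx = min(max((x + 0.5) / scale - 0.5, 0.0), w - 1)
            x0 = int(sx)
            x1 = min(x0 + 1, w - 1)
            fx = sx - x0
            # Blend the four surrounding source pixels by distance.
            top = img[y0][x0] * (1 - fx) + img[y0][x1] * fx
            bottom = img[y1][x0] * (1 - fx) + img[y1][x1] * fx
            row.append(top * (1 - fy) + bottom * fy)
        out.append(row)
    return out


# A hard black-to-white edge, two pixels wide.
edge = [[0, 255], [0, 255]]
upscaled = bilinear_upscale(edge, 2)
print(upscaled[0])  # the hard edge becomes a gradual ramp
```

Every output value is a blend of existing pixels, so the result can never contain anything sharper than the input: the crisp edge turns into a ramp, which is the blur described above.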

🧠 2. AI-Based (Super-Resolution) Upscaling

AI upscaling (as in Topaz Gigapixel, or models such as ESRGAN and Real-ESRGAN) reconstructs detail using a trained neural network.

💡 Key Idea:

During training, the network sees a very large number of low-res and high-res image pairs. It learns what real high-res details usually look like and uses that knowledge to “hallucinate” convincing detail in low-res images.

🔧 How It Works – Step-by-Step:

1. Training Phase

The network is trained on many image pairs, where the low-res version is usually made by downscaling the high-res original:

📉 Low-res version

📈 High-res ground truth

The network learns to minimize the difference between its output and the real high-res version.
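As a rough sketch of one training example, the code below builds a low-res/high-res pair and measures the pixel difference a network would be trained to reduce. Everything here is illustrative: `downsample`, `nearest_upscale`, and `mse` are hypothetical helpers, and `nearest_upscale` only stands in for a real neural network, which would have trainable weights adjusted to shrink this loss.

```python
def downsample(img, factor):
    """Create the low-res input by averaging each factor x factor block."""
    h, w = len(img) // factor, len(img[0]) // factor
    return [
        [
            sum(img[y * factor + dy][x * factor + dx]
                for dy in range(factor) for dx in range(factor)) / factor ** 2
            for x in range(w)
        ]
        for y in range(h)
    ]


def nearest_upscale(img, factor):
    """Placeholder 'model': repeats pixels. A real network would go here."""
    return [
        [img[y // factor][x // factor] for x in range(len(img[0]) * factor)]
        for y in range(len(img) * factor)
    ]


def mse(a, b):
    """Mean squared pixel difference between two same-sized images."""
    diffs = [(pa - pb) ** 2 for ra, rb in zip(a, b) for pa, pb in zip(ra, rb)]
    return sum(diffs) / len(diffs)


# High-res ground truth with fine detail inside each 2x2 block.
high_res = [
    [10, 30, 200, 220],
    [30, 10, 220, 200],
    [200, 220, 10, 30],
    [220, 200, 30, 10],
]
low_res = downsample(high_res, 2)          # training input
prediction = nearest_upscale(low_res, 2)   # stand-in for the network's output
loss = mse(prediction, high_res)           # value that training tries to minimize
print(low_res, loss)
```

The fine detail inside each block is gone from `low_res`, so the placeholder cannot restore it and the loss stays above zero. Training a real network amounts to repeating this comparison over many pairs and nudging the weights so the loss goes down.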

2. Inference Phase (When You Use It)

You give it a low-resolution image.

It analyzes textures, edges, and patterns.

Based on what it learned, it generates plausible high-resolution details, such as:

Sharper eyes or hair strands in faces

Cleaner text on signs

More texture on clothing or surfaces

🔬 Techniques Used

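Convolutional Neural Networks (CNNs): Stacked convolution layers extract features such as edges and textures from the input and build the upscaled output from them.

Generative Adversarial Networks (GANs): One network (the generator) produces detail, and the other (the discriminator) judges whether the result looks real.

Perceptual Loss: Instead of comparing only pixel differences, the loss compares feature maps from deep layers of a pretrained network (e.g., VGG), so the result “looks” more realistic to humans.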
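The difference between a pixel loss and a feature-based loss can be shown with a toy example on a single image row. This is only a sketch under loud assumptions: `toy_features` is a hand-made edge detector standing in for VGG feature maps, and `toy_perceptual_loss` is a hypothetical name, not an API from any library.

```python
def mse(a, b):
    """Mean squared difference between two equal-length sequences."""
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def toy_features(row):
    # Hypothetical stand-in for deep feature maps: local edge strength.
    return [abs(row[i + 1] - row[i]) for i in range(len(row) - 1)]


def toy_perceptual_loss(pred, truth):
    """Compare images in feature space instead of pixel space."""
    return mse(toy_features(pred), toy_features(truth))


truth = [0, 200, 0, 200, 0, 200]      # fine texture, e.g. fabric weave
blurry = [100] * 6                    # safe average, no texture
textured = [200, 0, 200, 0, 200, 0]   # right texture, shifted by one pixel

pixel_blurry = mse(blurry, truth)
pixel_textured = mse(textured, truth)
feat_blurry = toy_perceptual_loss(blurry, truth)
feat_textured = toy_perceptual_loss(textured, truth)

print(pixel_blurry, pixel_textured)   # pixel loss prefers the blurry output
print(feat_blurry, feat_textured)     # feature loss prefers the textured output
```

A pure pixel loss rewards the flat gray guess, which is why models trained only on pixel differences tend to look smooth. Comparing features rewards output with the right kind of texture even when it does not line up pixel for pixel.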

🎯 Result:

You get an upscaled image that looks like it had more resolution to begin with, even though the AI is effectively guessing what was lost. The added detail is plausible, not guaranteed to match the original scene.